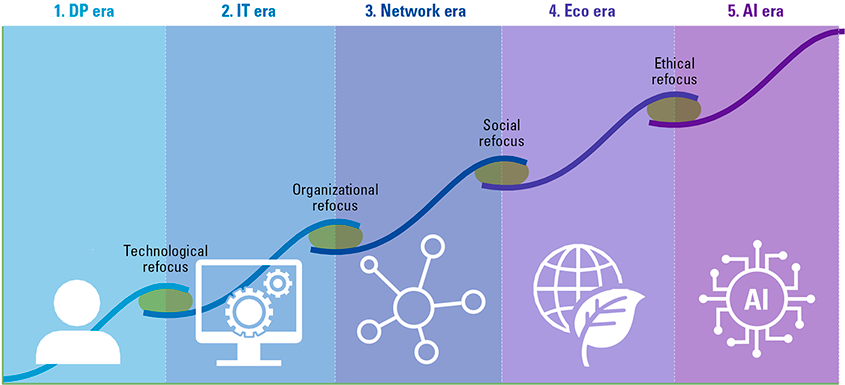

The first computers made their appearance in the 1950s. Socially, this produced a mix of enthusiasm and fear: enthusiasm about the possibilities, fear of the negative side effects. Now that artificial intelligence is breaking through, that mix is visible again. Partly for this reason, it is worth looking back at the enormous development of information technology since then: from specialist departments developing rigid, centralized systems to users taking control themselves and responding hyperflexibly to new possibilities.

For the Dutch version of this article, see: Wie zijn verleden kent, begrijpt zijn toekomst

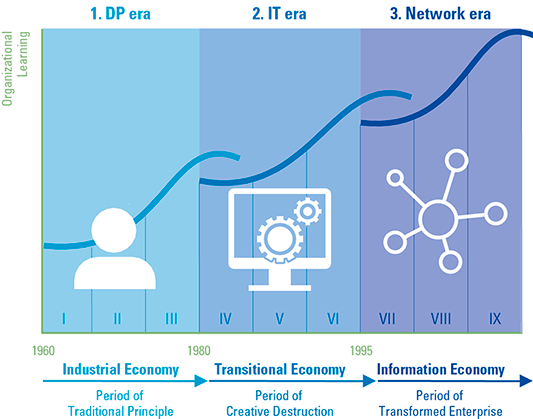

Without information technology, our society comes to a standstill. This has not always been the case. An analysis of recent decades reveals a number of phases.

Phase 1: The start – data processing (DP)

When the first computer – the PTT-developed PTERA – went into service in the Netherlands in 1953, no one could have imagined what this would turn into more than half a century later. The first steps were mainly taken by large companies such as Philips, Fokker and Shell, and by financial institutions. Computers were expensive machines (mainframes) the size of a hall or an entire building, and could do very little. It did slowly become clear that great organizational advantages lay ahead, driven in part by the growth of computing power and data storage.

Figure 1. A computing center in 1967.

Automation was initially the exclusive domain of central automation departments. The heads of these departments, the EDP managers (EDP: Electronic Data Processing), distributed budgets and made decisions about the development of technology. It is unthinkable now, but back then neither users nor management were involved. Programmers built customized systems for the organization, since no off-the-shelf packages were available on the market. In fact, they combined several functions: operator, programmer, system analyst and information analyst.

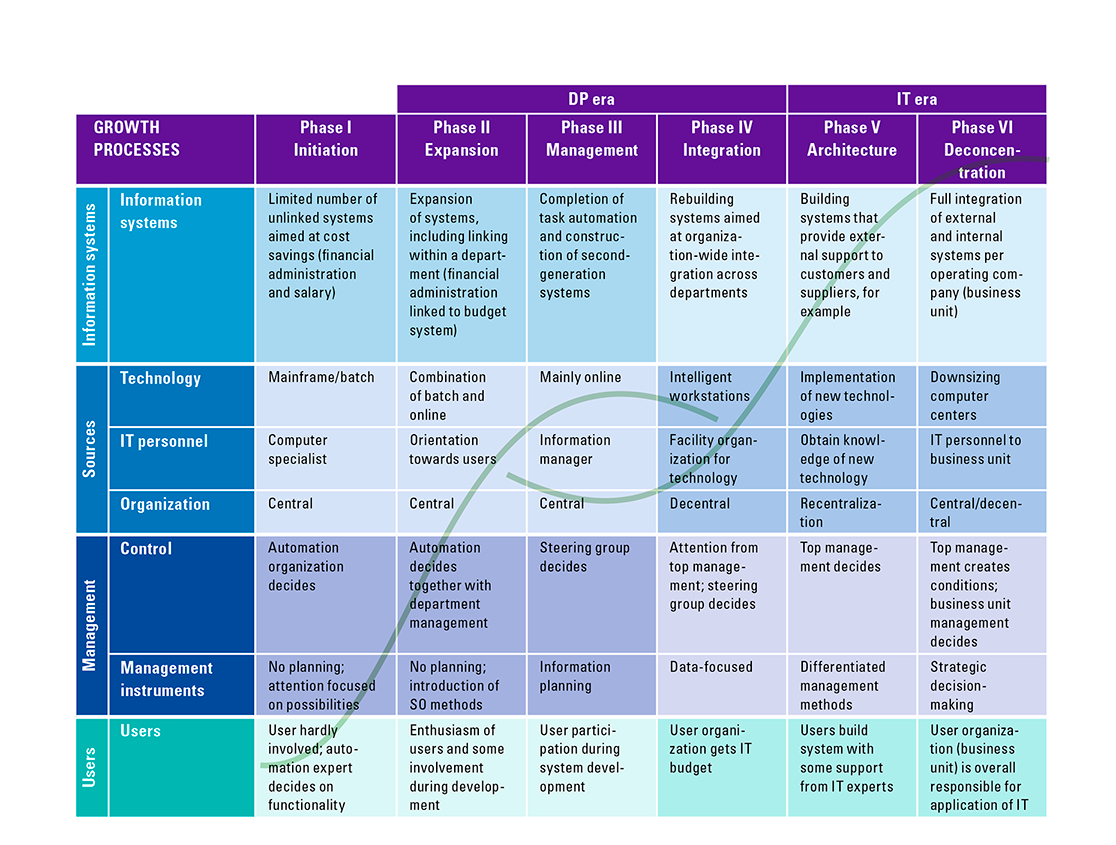

In 1973, Harvard professor Dick Nolan wrote a famous paper on how automation goes through different stages of maturity. He soon turned out to have excellent foresight. In the early 1980s, automation became commonplace in almost all companies and professionalization set in. This can be seen, for example, in the emergence of system development methodologies, such as SDM (System Development Methodology) by Pandata (now Capgemini). Organizations also started to take a more policy-driven approach to information and automation plans and, as a result, users – employees who use automated applications on a daily basis – gained input into these plans.

The introduction of the personal computer in the mid-1980s was an unmistakable accelerator in this period, prompting widespread social adoption, helped along by “pc-private” programs in which organizations provided their employees with a computer for home use.

Figure 2. The first two eras: data processing and IT.

Phase 2: From automation to information technology

The dynamism and speed of society is increasing and has an impact on how companies organize themselves. The classic hierarchical pyramid works fine in a stable environment but quickly proves too cumbersome in terms of decision-making for such a dynamic environment. New organizational forms that respond to this are emerging and introduce more speed and flexibility.

At the same time, the capabilities of information technology are growing rapidly and are quickly becoming affordable. This cannot be separated from Moore’s Law, popularly summarized as the price of information storage and processing capacity halving roughly every 18 months ([Down88]). IT is getting cheaper and smaller and can be used anywhere. One of the effects is that all kinds of business functions are connected, which soon results in a process-oriented organizational form. Until then, departments and business functions (such as purchasing, production and sales) were central; now business processes are becoming central. They are redesigned end to end, from product design and product realization through to service delivery. The business becomes intertwined with and through IT.
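To get a feel for what halving every 18 months means, the short Python sketch below compounds that rule over a number of years. It is an illustration only: the function name `relative_cost` and the chosen time spans are assumptions made for this example, and the 18-month period is the rule of thumb quoted above, not a measured figure.

```python
def relative_cost(years: float, halving_period_months: float = 18.0) -> float:
    """Price of a fixed amount of capacity after `years`, relative to the starting price.

    Assumes the price halves once every `halving_period_months` (hypothetical
    default of 18 months, taken from the rule of thumb in the text).
    """
    halvings = years * 12.0 / halving_period_months
    return 0.5 ** halvings


# Illustrative time spans; any other values work the same way.
for years in (3, 6, 15):
    share = relative_cost(years)
    print(f"after {years:>2} years: {share:.4%} of the original price")
```

Under this rule of thumb, 15 years amounts to ten halvings, so the same capacity would cost about a thousandth of its original price.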

Figure 3. The mass entry of the personal computer.

The second Nolan curve therefore encompasses the era in which classic “pyramids” are transforming into process-driven organizations. Business Process Redesign (BPR) is the name of the game: this collection of methods and techniques is at the heart of the transformations.

One consequence is that the automation department and its head (now renamed IT manager) are losing power. Many organizations are even outsourcing system development and management entirely and switching to packages available in the market. During this time, the position of CIO (Chief Information Officer) emerges, whose role is to bridge the gap between the technologists and business management.

This bridging function is one of the key success factors of the time, and models such as Henderson and Venkatraman’s 2×2 model and Rik Maes’ 3×3 Amsterdam nine-plane model play into this. Despite these tools, it often proves to be a tough challenge and many a CIO struggles with it. ‘Career Is Over’ was a popular play on words about the CIO at the time. Other members of top management are also beginning to take an interest in the subject of IT, in the growing realization that it is a crucial strategic issue. The emergence of all kinds of personal IT – such as the laptop – is helping that awareness tremendously.

In this phase, we also see the first signs that boundaries between organizations are becoming fluid. Organizations are beginning to make choices about which tasks are not core competencies. These are being outsourced to other organizations. It is the prelude to the networking era.

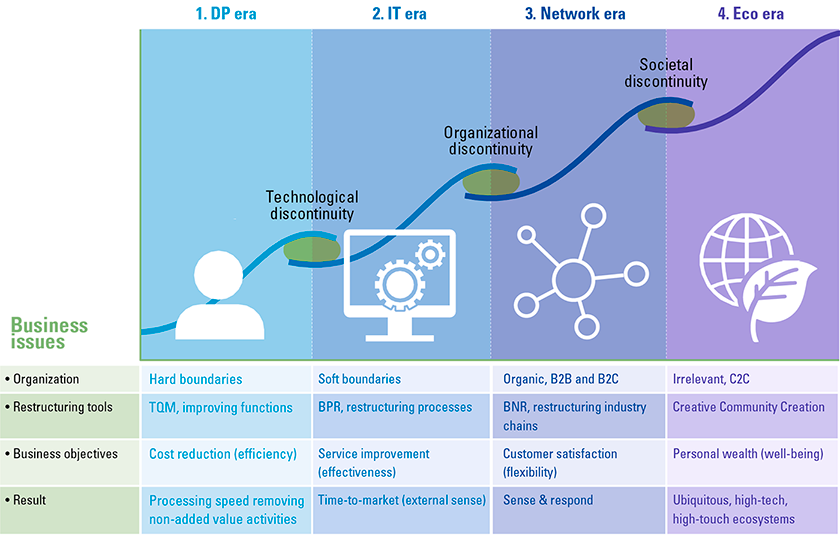

Phase 3: The emergence of the networking era

In this phase, the role of IT increasingly transcends the traditional boundaries of the organization. Organizations are increasingly transferring secondary processes to Shared Service Centers and/or outsourcing non-core tasks. The number of collaborations with other organizations is growing, as is their intensity. Individual organizations no longer have hard boundaries and that also means that changes in business processes (and therefore IT) impact entire chains. Innovation, such as introducing new or enriched products, services and distribution channels, becomes an ongoing process, as does entering new markets ([Nola98]). IT becomes intertwined with all aspects of business and with virtually everything that happens in the business and its environment: with customers, suppliers and (chain or ecosystem) partners.

Figure 4. Networking makes its appearance.

Traditional mainframes, massive data processing and distributed IT are not disappearing, but the focus is changing. A company’s distinctiveness primarily derives from properly organizing the communication and distribution of information and knowledge within networks. The internet plays a key role in this and soon turbocharges the creation of a hyperconnected society. Digitalization is becoming en vogue.

Figure 5. Development of networks and network organizations.

Once again, this development is impacting how decisions are made about IT, as IT becomes more intertwined with business strategy than ever before. IT is no longer (only) about transactions but (mainly) about a seamless exchange of knowledge and information. This requires generic features such as customer relationship management, workflow management, groupware, embedded software, multimedia and mobile data communications. Underneath, strong and flexible infrastructures (digital backbones) are needed to tie everything together. Anything, anyone, any time, any place, any device. Those backbones include a multitude of facilities, from hard-wired to wireless, and are increasingly in public and private clouds.

As a result of all the above, decision-making on IT becomes a joint matter of top management, business unit management and individual cells in collaborating and networking organizations. The bridge function of the CIO is no longer necessary in the network era ([Muts13]). After all, business management now has the knowledge and skills to make the translation from business to technology. The specific “translation function” of the CIO has therefore become superfluous ([Zee99]). Their career is indeed over.

Phase 4: Ecosystems

The aforementioned developments are continuing and accelerating. One notable development is the miniaturization of IT that provides the basis for even more connectivity. The number of sensors exchanging data over the internet is growing. They are built into all kinds of products, such as cars, washing machines, boilers and coffee machines, and they underpin numerous applications that provide people with customized services, ranging from an automatically adjusted car seat to finding a suitable date with the right sexual preferences.

The Internet-of-things-and-lives is taking shape and an entirely new social ecosystem is emerging. People use hundreds of computers and internet connections every day, for business and personal purposes, often without realizing it. Communication between all these computers takes place through an array of standardized WANs (wide area networks), LANs (local area networks) and PANs (personal area networks). That information is no longer transferred through hopelessly obsolete, text-oriented keyboards, but through user interfaces that can handle all sensory transfer techniques – speaking, hearing, seeing, tasting, smelling and feeling.

Figure 6. Everything is connected to everything.

Information technology is more essential than ever to the normal functioning of society, and as its use grows, its returns increase quadratically (Metcalfe’s Law). If you look at it from a distance, you will see developments on three fronts that interact: (1) much greater connectivity, (2) the ability to deal with great complexity due to growing computing power, and (3) the replacement or enrichment of existing physical products with information.
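The quadratic effect can be illustrated with a small Python sketch. It counts the possible pairwise connections between n participants, n(n - 1)/2, as a simple stand-in for the value of a network. Equating value with the number of possible connections, and the function name `possible_connections`, are assumptions made for this example rather than a definitive statement of Metcalfe's Law.

```python
def possible_connections(participants: int) -> int:
    """Number of distinct pairs that can be formed among the participants.

    Used here as a hypothetical proxy for network value.
    """
    if participants < 0:
        raise ValueError("participants must be non-negative")
    return participants * (participants - 1) // 2


# Illustrative network sizes, each ten times larger than the previous one.
for n in (10, 100, 1_000):
    print(f"{n:>5} participants -> {possible_connections(n):>7} possible connections")
```

In this sketch, each tenfold increase in participants yields roughly a hundredfold increase in possible connections, which is the pattern the quadratic growth refers to.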

All in all, a fourth, new development curve has emerged, building on Nolan’s three phases. In 2000, Van der Zee named this “the eco era” ([Zee00]), a term indicating an even more organic and fluid composition of society, economy and business, and the involvement of all who play a part in it.

Figure 7. The emergence of ecosystems.

In this era, information technology enables everyone to access and provide access to all kinds of information, to communicate with each other and to engage in transactions among themselves. Virtual, networked organizations are emerging that can do all this independent of time and location. The possibilities are virtually limitless.

The electronic exchange of information and knowledge therefore also constitutes an entirely new economic and social order. The strategic question that entrepreneurs, and therefore CIOs, must ask themselves at this stage is: how do we ensure that we are an IT-based, virtual, networked organization that is part of a larger, networked world?

The answer to this question lies in ideas for improving existing products, services, markets, distribution channels and organizational forms – or better yet, for developing entirely new products. These good ideas – often only possible with the application of new technology – require creativity and originality, of course, but above all they require implementation power (in a social, technological, organizational, economic and political sense). Whoever is best able to bring the ideas from the drawing board to practice will be tomorrow’s winner.

Phase 5: The AI era

The rise of artificial intelligence (AI) heralds yet another new phase. In this phase, creativity and pioneering deployment become decisive. This is because AI and data-driven algorithms are creating autonomy from technology. Technology has become “common standard,” widely available and with seemingly infinite capacity and capabilities (the digital backbone). Complicated problems and challenges become easier to solve due to the advancing capabilities of technology: think, for example, of far-reaching automation and optimization of logistics, predicting customer behavior and anticipating it automatically, or detecting fraud in huge amounts of data traffic.

One landmark moment is the introduction of ChatGPT, a chatbot built on a Large Language Model (LLM). It is not so much groundbreaking in terms of technology; more importantly, it makes the use of AI extremely simple and accessible, and it manages to build a huge user base in a short period of time. Companies can deploy chatbots that, for example, answer customer questions about products or services much faster and more accurately than a traditional service desk – 24/7 and without queues. They can do so without having to build a chatbot themselves, by training standard available chatbots with good, relevant data.

Figure 8. AI and robotization.

Looking back at this development, one of the things you see is that new issues are emerging about how humans and machines work together. The simplicity in the use of AI tools has reached unprecedented levels, while the underlying complexity is understood by few. It often involves a highly complex cocktail of hard engineering, software code and sophisticated analytics. Issues such as security, governance and ethics also come into play. Building an application therefore requires a combination of specialists, each of whom contributes high-quality knowledge in their own field, but none of whom understands all the details.

This is a trend that is spreading more broadly: we can create increasingly simple user shells around an increasingly complex core. Programmers can work with modern programming languages without too much knowledge of what the code does under the hood. “The future of coding is no coding at all,” former GitHub CEO Chris Wanstrath said of it. In this era, any user can suddenly do their own programming.

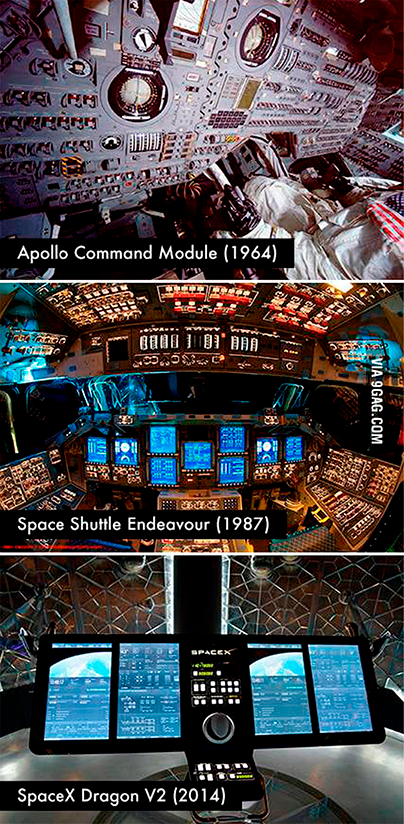

A good analogy in this regard is the development of spacecraft user interfaces. An online search on “Apollo Shuttle Dragon” produces a beautiful visual showing how astronauts no longer need to know the operation of hundreds of buttons but have a pair of clear touchscreens in front of them. The beauty of it is that even though there is no one overseeing the whole thing, it still works. Apparently, we can work together wonderfully as human beings. One consequence, however, is that the operation of the whole thing under the hood is virtually impossible to explain.

Figure 9. AI accessible to all (source: https://www.reddit.com/r/spacex/comments/4cfnzo/the_evolution_of_space_cockpits_apollo_shuttle/?rdt=64418).

This raises a fundamental question about the rise of AI. Are we as humans willing to use such applications even if no one can oversee or explain the whole thing? And what if something goes wrong? That is not an unthinkable scenario with the first versions of LLMs, which demonstrably make mistakes or generate information that does not exist (called “hallucinating” in professional jargon). In this context, the comparison with a Japanese kitchen knife is frequently made: AI provides very useful tools, but it could be life-threatening if you don’t know how to handle it.

Numerous new questions therefore arise in this phase. How and where can we optimize our business model using AI? What (social) problems do we need to solve? How do we arrive at responsible applications? How do we ensure that we properly safeguard ethical aspects? How can we ensure that the reliability of the outcomes remains up to par? And in a social sense: how do we ensure that we as humans continue to function autonomously, without too much influence from technology?

What does this phase mean for how organizations should handle their technology landscape? One clear development in architectures is that application functionality is being separated from the data layer. As a result, data has become widely available and much more accessible. Combined with the greatly increased availability of processing and storage power, it becomes possible to organize and classify that data and recognize patterns. This offers new opportunities and insights, in which algorithms and AI can play an important role in speeding up and enriching processes and services, and even in enabling completely new services.

The underlying technological infrastructure or digital backbone is the responsibility of the Chief Technology Officer, who decides what to do in-house and what to outsource to partners. In addition, the Chief Information Security Officer (for security) and the Chief Data Officer (for data quality and data management) play an important role, the latter in consultation with the owners of the data in the business. The role of the CIO seems to have disappeared at this stage, but we do see new roles, such as a Chief Digital Officer who facilitates the business in the ever-increasing digitalization throughout the chain (including suppliers, partners and customers/citizens). Another possibility is that a Chief Ethics Officer will make an appearance, particularly to set and monitor ethical frameworks.

Conclusion

There is considerable fear in the current era about negative effects of AI, prompted in part by dark stories in the media. In a sense, we have come full circle. When the first computers arrived in the 1950s, there was also resistance. In fact, this plays out with almost every new technology: there is always a bright side and a dark side in the application. The challenge is always to manage the dark side as well as possible and to leverage the bright side in a responsible way. That has been achieved reasonably successfully over the past fifty years. There is really no reason to believe that it will not be achieved in the next fifty years as well.

The authors were fellow consultants until 2002 at the then renowned consulting firm Nolan, Norton & Co, acquired by KPMG in 1988, and were engaged in advising and guiding leading international organizations in their growth in the application of IT in the various eras described, the so-called Stages of Growth. Starting in 2002, they followed different growth paths and in early 2023 they met to write this article and share their personal experiences of the past 50 years.

References

[Down88] Downes, L. & Mui, C. (1998). Unleashing the Killer App: Digital Strategies for Market Dominance. Harvard Business School Press.

[Muts13] Mutsaers, E.J., Koot, W.J.D. & Donkers, J.A.M. (2013). Why CIO’s should make themselves obsolete. Compact, 2013(4). Accessed at: https://www.compact.nl/en/articles/why-cios-should-make-themselves-obsolete/

[Nola98] Nolan, R.L. & Bradley, S.P. (1998). Sense & Respond: Capturing Value in the Network Era. Harvard Business School Press.

[Zee99] Van der Zee, J.T.M. & de Jong, B. (1999). Alignment is not Enough: Integrating IT in the Balanced Business Scorecard. Journal of Management Information Systems, 16(2) (Fall, 1999), 137-156.

[Zee00] Van der Zee, J.T.M. (2000). Business Transformation and IT: Vervlechting en ontvlechting van ondernemingen en informatietechnologie. Oration Katholieke Universiteit Brabant, Dutch University Press, Tilburg.